AI Engineer World 2024: Key Takeaways and Future Trends

The AI Engineer World’s Fair, held from June 24 to June 27 in San Francisco, is one of the premier events for AI practitioners and enthusiasts. This annual conference showcases the latest advancements in AI technology and provides invaluable insights into industry trends. As an attendee, I was thrilled to learn from its many talks and workshops. Here are my main takeaways and reflections.

1. Model is not the moat

The rapid advancement of Large Language Models (LLMs) was a central theme of the conference. OpenAI (GPT-4o), Google (Gemma 2), Anthropic (Claude 3.5 Sonnet), Mistral (8x22 and C), and Cohere (Command-R) all presented their latest models, showcasing impressive performance.

However, a key insight emerged: models themselves are not the competitive advantage. The landscape has shifted dramatically since just a year ago, when OpenAI was the primary player. Now, developers have access to numerous high-quality alternatives.

Key takeaways:

- Focus on building processes and workflows that leverage LLMs for domain-specific use cases.

- Make your code modular so it can easily adapt to new model releases.
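
To make the modularity point concrete, here is a minimal sketch of one way to do it: the domain workflow depends only on a small interface, and each model provider sits behind that interface. All names here (`ChatModel`, `EchoModel`, `REGISTRY`, `summarize_ticket`) are hypothetical; `EchoModel` is a stand-in for a real backend that would wrap a vendor SDK.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface every model backend must satisfy (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend for illustration; a real one would call a vendor SDK."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


# Registry mapping config names to backends: supporting a new model release
# means adding one entry here, not rewriting the business logic.
REGISTRY: dict[str, ChatModel] = {
    "provider-a": EchoModel("provider-a"),
    "provider-b": EchoModel("provider-b"),
}


def summarize_ticket(ticket: str, model: ChatModel) -> str:
    """Domain workflow that depends only on the ChatModel interface."""
    prompt = f"Summarize this support ticket in one sentence:\n{ticket}"
    return model.complete(prompt)
```

Switching providers then becomes a configuration change, e.g. `summarize_ticket(text, REGISTRY["provider-b"])`, and the prompt and workflow code stay untouched.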

2. Autonomous Agents: Not Yet Ready for Prime Time

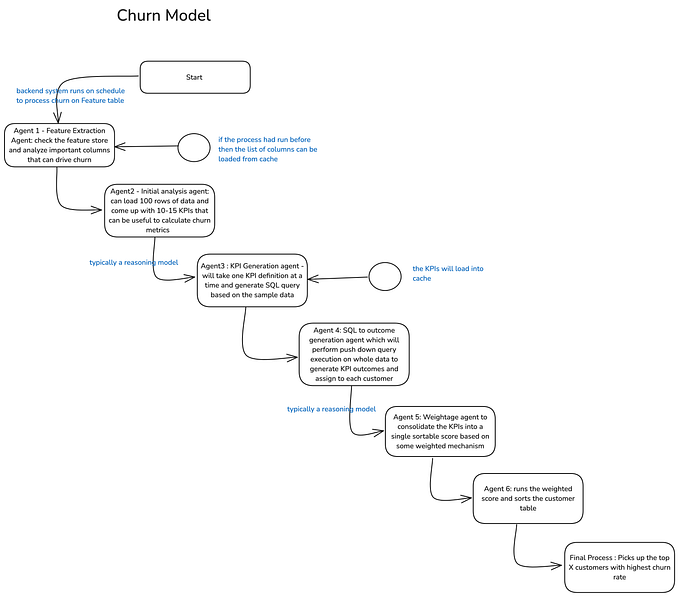

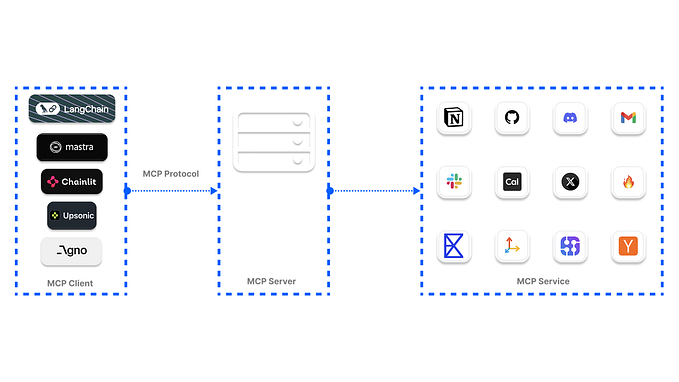

Agents were another hot topic at the conference, with presentations from LangChain, LlamaIndex, and Amazon Q. While these solutions show promise, the consensus is that agents are not yet fully production-ready.

Current state of agents:

- Handle simple, straightforward tasks well.

- Struggle with complex scenarios.

- Blindly applying the ReAct framework (giving an LLM access to all tools and letting it call them in a for loop) can be risky in production environments.
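
As an illustration of what "not blindly" could mean, here is a minimal sketch of a stripped-down ReAct-style act/observe loop with two guardrails: an explicit per-use-case tool allowlist and a hard step cap. The `policy` function stands in for an LLM choosing the next action; `Action`, `run_agent`, `scripted_policy`, and the toy `add` tool are all hypothetical and not taken from any framework.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    tool: Optional[str]  # None means the model has produced its final answer
    text: str            # tool input, or the final answer when tool is None


Policy = Callable[[list[str]], Action]


def run_agent(policy: Policy, tools: dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """ReAct-style act/observe loop with two guardrails:
    an explicit tool allowlist and a hard step cap."""
    history: list[str] = []
    for _ in range(max_steps):
        action = policy(history)
        if action.tool is None:
            return action.text
        if action.tool not in tools:
            # Refuse rather than let the model reach for a tool it was not given.
            return f"error: tool {action.tool!r} is not allowed"
        observation = tools[action.tool](action.text)
        history.append(f"{action.tool}({action.text}) -> {observation}")
    return "error: step limit reached"


# Hypothetical stand-ins: a scripted "LLM" policy and a single toy tool.
def scripted_policy(history: list[str]) -> Action:
    if not history:
        return Action(tool="add", text="2+3")
    return Action(tool=None, text="The answer is " + history[-1].split(" -> ")[-1])


TOOLS = {"add": lambda expr: str(sum(int(x) for x in expr.split("+")))}
```

Here `run_agent(scripted_policy, TOOLS)` returns `"The answer is 5"`, while a policy that asks for an unlisted tool, or never finishes, fails fast instead of looping indefinitely.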

Recommendations:

- Balance reliability and flexibility: customize the parts of the workflow you can control, and leverage LLMs where you need flexibility.

- Understand your use case thoroughly to develop effective routing logic.
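
A minimal sketch of such routing logic, assuming a hypothetical customer-support use case: queries that match well-understood intents go to deterministic handlers, and only the rest fall back to an LLM. The patterns and handler names (`order_status`, `refund_policy`, `llm_fallback`) are illustrative, and the fallback is stubbed.

```python
import re
from typing import Callable

Handler = Callable[[str], str]


def order_status(query: str) -> str:
    # Deterministic path: extract a 6-digit order id (the lookup itself is stubbed).
    match = re.search(r"\b(\d{6})\b", query)
    return f"status lookup for order {match.group(1)}" if match else "please provide a 6-digit order id"


def refund_policy(_query: str) -> str:
    # Static answer: no model call needed for a well-known question.
    return "static refund policy text"


def llm_fallback(query: str) -> str:
    # Hypothetical stub standing in for an LLM or agent call.
    return f"LLM handles: {query}"


# Ordered rules: the first matching pattern wins.
ROUTES: list[tuple[re.Pattern, Handler]] = [
    (re.compile(r"\b(order|tracking)\b", re.IGNORECASE), order_status),
    (re.compile(r"\brefund", re.IGNORECASE), refund_policy),
]


def route(query: str) -> str:
    for pattern, handler in ROUTES:
        if pattern.search(query):
            return handler(query)
    return llm_fallback(query)
```

In practice the rules could themselves be an LLM-based classifier; the point is that the set of routes comes from understanding the use case, not from the model.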

Interesting tools:

Here is an easy-to-follow notebook from LangChain to get your hands dirty.

Another fun demo is from Amazon Bedrock: it uses agents to play Minecraft based on plain-text instructions. If you are interested in the details, please check out their repo.

Other notable agent companies present at the conference included crewAI, Factory, Zeta Labs, and Deepgram.

3. Multimodality and Open Models: The Future of AI

This expansive topic yielded several key observations:

- Multimodal models are ascendant: These models, which can process multiple types of data (text, images, audio), represent the future of AI. One big benefit is reduced latency. For voice assistants, for example, human response time in conversation is under 500 ms. The traditional approach chains several steps (speech-to-text, text generation, text-to-speech), each of which adds delay. A single multimodal model can dramatically speed up this process. The GPT-4o live demo at the conference felt literally like talking with a human.

- Rise of open models: Google (Gemma 2), Mistral (8x22 and C), and Cohere (Command-R) showcased models that compete well on various benchmarks. The advice I heard repeatedly was to start building your solution with reliable, performant APIs such as GPT-4o and Claude 3.5 Sonnet. Once you have more users, you can consider fine-tuning an open model for simple, domain-specific tasks. For easy fine-tuning and deployment, check out this notebook from OctoAI.

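- Advancements in model interpretability: Anthropic’s “Golden Gate Claude” project shows promise for addressing harmful content. Using dictionary learning, researchers mapped the model’s internal activations to interpretable features; by amplifying or suppressing specific features, they can potentially adjust model behavior (Golden Gate Claude was a version of Claude with a “Golden Gate Bridge” feature clamped to a high value). The accompanying paper, “Scaling Monosemanticity,” is very interesting, and I highly recommend reading it.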
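
To make the latency argument concrete, here is a back-of-the-envelope sketch: in a cascaded voice pipeline the stages run one after another, so their latencies add up, while a single multimodal model pays only one stage's cost. The stage latencies below are placeholder numbers chosen for illustration, not measurements from the conference, and the sketch ignores streaming tricks that can overlap stages.

```python
from dataclasses import dataclass

# Rough threshold for a natural-feeling reply, per the ~500 ms figure above.
TURN_BUDGET_MS = 500


@dataclass
class Stage:
    name: str
    latency_ms: float


def total_latency(stages: list[Stage]) -> float:
    """Sequential stages: end-to-end latency is the sum of stage latencies."""
    return sum(stage.latency_ms for stage in stages)


def fits_budget(stages: list[Stage], budget_ms: float = TURN_BUDGET_MS) -> bool:
    return total_latency(stages) <= budget_ms


# Placeholder numbers for illustration only, not benchmarks.
cascaded = [
    Stage("speech-to-text", 300),
    Stage("LLM text generation", 400),
    Stage("text-to-speech", 200),
]
single_model = [Stage("speech-in/speech-out multimodal model", 300)]
```

With these assumed numbers, `total_latency(cascaded)` is 900 ms and blows the budget, while the single-model path fits; the exact figures will differ per system, but the additive structure is why removing hops matters.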

4. Everyone cares about LLM evaluation

Effective evaluation of LLMs emerged as a crucial topic. While it builds on established concepts like MLOps and DevOps, it requires some unique approaches. To get started, I recommend the following framework from Hamel Husain.

- Do not use generic data for testing. You need to create domain-specific datasets, which can later become your first fine-tuning datasets.

- Logging traces is absolutely critical for analysis and monitoring.

- Consider unit tests for actionable or numerical outputs.

- Combine LLM-based judges with human-in-the-loop approaches for text evaluation.

- Lastly, re-evaluate prompts when changing models or versions.
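
Here is a minimal sketch of three of these ideas together: a small domain-specific test set, trace logging on every model call, and unit-test-style assertions for an output that can be checked programmatically (a JSON invoice total). The model is a hypothetical stub, and the case data, `traced`, and `run_unit_tests` are illustrative names; LLM-as-judge and human review are not shown.

```python
import json
import time
from typing import Callable

TRACES: list[dict] = []  # in production these would go to a file or tracing backend


def traced(model: Callable[[str], str], prompt: str) -> str:
    """Call the model and record a trace of input, output, and latency."""
    start = time.perf_counter()
    output = model(prompt)
    TRACES.append({
        "prompt": prompt,
        "output": output,
        "latency_s": round(time.perf_counter() - start, 4),
    })
    return output


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM asked to extract an invoice total as JSON.
    return json.dumps({"total": 42.5, "currency": "USD"})


# Domain-specific cases (hypothetical); these can later seed a fine-tuning set.
CASES = [
    {"prompt": "Invoice: 2 widgets at $21.25 each. Extract the total as JSON.", "expected_total": 42.5},
]


def run_unit_tests(model: Callable[[str], str]) -> list[str]:
    """Assertion-style checks for outputs that can be verified programmatically."""
    failures = []
    for case in CASES:
        raw = traced(model, case["prompt"])
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            failures.append(f"not valid JSON: {raw!r}")
            continue
        total = data.get("total") if isinstance(data, dict) else None
        if total != case["expected_total"]:
            failures.append(f"wrong total: {total!r}")
    return failures
```

Rerunning `run_unit_tests` against each new model or version is a cheap way to catch regressions before prompts are re-tuned.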

5. Speed up CPU inference: New language and tools

While GPUs offer superior performance, budget constraints often necessitate CPU-based solutions. Several promising tools for speeding up CPU inference were highlighted:

- llamafile: This is an open-source project from Mozilla that makes CPU inference 30–500% faster. It turns open-source LLMs into single-file executables that run on multiple platforms, so you can run them locally and privately without even needing internet access.

- MAX from Modular: It’s a new AI platform that includes the MAX Engine, MAX Serving, and the Mojo programming language. The company claims the new solution is ~5x faster than llama.cpp and that Mojo is 100–1000x faster than Python. Although the exact performance comparisons are debated, I believe Mojo is still an interesting new language to keep an eye on, especially for its potential to program GPUs with Pythonic code instead of CUDA. If you are interested, please check out their official repo.
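
Once a llamafile is running, application code can talk to it like a hosted API. Below is a minimal standard-library sketch, assuming the llamafile is running in server mode on its default port 8080 and exposing an OpenAI-compatible `/v1/chat/completions` endpoint, as described in the llamafile README; the URL, the `"LLaMA_CPP"` model value, and the helper names `build_request` and `chat` are assumptions to verify against the version you download.

```python
import json
import urllib.request

# Assumption: a llamafile is running locally in server mode with an
# OpenAI-compatible API on its default port. Verify against the llamafile README.
LLAMAFILE_URL = "http://localhost:8080/v1/chat/completions"


def build_request(prompt: str, url: str = LLAMAFILE_URL) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request to the local server."""
    payload = {
        "model": "LLaMA_CPP",  # assumption: model value used in the README examples
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request to the local server and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Because the request targets `localhost`, `chat("Hello")` keeps both the prompt and the response on your machine; building the request separately also makes it easy to test without a running server.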

Closing Thoughts: The Evolving Landscape of AI Development

The AI industry is progressing at an incredible pace. As AI developers, we must remain adaptable, continuously learn, and innovate. This conference underscored the importance of staying current with the latest tools and methodologies while also thinking critically about their application in real-world scenarios.

The future of software development is being reshaped by AI, and events like the AI Engineer World’s Fair provide crucial insights into this transformation. I left the conference feeling both excited and challenged by the possibilities that lie ahead.

For those interested in diving deeper, I encourage you to explore the official conference website and YouTube channel for more in-depth content from the various talks and workshops.